---

# FAQ

---

The following are frequently asked questions grouped into ten categories: [General](#general), [Installation](#installation), [Physics](#physics), [Sources](#usage-sources), [Fields](#usage-fields), [Materials](#usage-materials), [Structures](#usage-structures), [Subpixel Averaging](#usage-subpixel-averaging), [Performance](#usage-performance), and [Other](#usage-other).

[TOC]

General
-------

### What is Meep?

Meep is a [free and open-source](https://en.wikipedia.org/wiki/Free_and_open-source_software) software package for [electromagnetics](https://en.wikipedia.org/wiki/Electromagnetism) simulation via the [finite-difference time-domain](https://en.wikipedia.org/wiki/Finite-difference_time-domain_method) (FDTD) method spanning a broad range of applications. The name Meep is an acronym for *MIT Electromagnetic Equation Propagation*.

### Who are the developers of Meep?

Meep was originally developed as part of graduate research at MIT. The project has been under continuous development for nearly 20 years. It is currently maintained by an active developer community on [GitHub](https://github.com/NanoComp/meep).

### Where can I ask questions regarding Meep?

The [Discussions](https://github.com/NanoComp/meep/discussions) page on GitHub can be used to ask questions regarding setting up simulations, analyzing results, installation, etc. Bug reports and new feature requests should be filed as an [issue](https://github.com/NanoComp/meep/issues) on GitHub. However, do not use issues as a general help desk if you do not understand something (use the Discussions instead).

### How can I contribute to the Meep project?

[Pull requests](https://github.com/NanoComp/meep/pulls) involving bug fixes, new features, and general improvements are welcome and can be made to the master branch on GitHub. This includes tweaks, revisions, and updates to this documentation, generated from [markdown](https://en.wikipedia.org/wiki/Markdown), which is also part of the [source repository](https://github.com/NanoComp/meep/tree/master/doc).

### Is there a technical reference for Meep?

Yes. The technical details of Meep's inner workings are described in the peer-reviewed publication [MEEP: A flexible free-software package for electromagnetic simulations by the FDTD method](http://dx.doi.org/doi:10.1016/j.cpc.2009.11.008), Computer Physics Communications, Vol. 181, pp. 687-702 (2010) ([pdf](http://ab-initio.mit.edu/~oskooi/papers/Oskooi10.pdf)). Additional information is provided in the book [Advances in FDTD Computational Electrodynamics: Photonics and Nanotechnology](https://www.amazon.com/Advances-FDTD-Computational-Electrodynamics-Nanotechnology/dp/1608071707) in Chapters 4 ("Electromagnetic Wave Source Conditions"), 5 ("Rigorous PML Validation and a Corrected Unsplit PML for Anisotropic Dispersive Media"), 6 ("Accurate FDTD Simulation of Discontinuous Materials by Subpixel Smoothing"), and 20 ("MEEP: A Flexible Free FDTD Software Package"). A [video presentation](https://www.youtube.com/watch?v=9CA949csYvM) and [slides](http://ab-initio.mit.edu/~ardavan/stuff/IEEE_Photonics_Society_SCV3.pdf) as well as a [podcast](http://www.rce-cast.com/Podcast/rce-118-meep.html) are also available.

### Where can I find a list of projects which have used Meep?

For a list of more than 2500 published works which have used Meep, see the [Google Scholar citation page](https://scholar.google.com/scholar?hl=en&q=meep+software) as well as that for the [Meep manuscript](https://scholar.google.com/scholar?cites=17712807607104508775) and the [subpixel smoothing reference](https://scholar.google.com/scholar?cites=410731148689673259). For examples based on technology applications, see this [projects page](http://www.simpetus.com/projects.html).

### Can I access Meep in the public cloud?

Yes. Using a virtual instance running Ubuntu, you can install the [Conda package for Meep](Installation.md#conda-packages).

Installation
------------

### Where can I install Meep?

Meep runs on any Unix-like operating system, such as Linux, macOS, and FreeBSD, from notebooks to desktops to supercomputers. [Conda packages](Installation.md#conda-packages) of the latest released version are available for Linux and macOS. Installing Meep from the source code requires some understanding of Unix, especially to install the various dependencies. Installation shell scripts are available for [Ubuntu 16.04 and 18.04](Build_From_Source.md#building-from-source) and [macOS Sierra](https://www.mail-archive.com/meep-discuss@ab-initio.mit.edu/msg05811.html).

### Can I install Meep on Windows machines?

Yes. For Windows 10, you can install the [Ubuntu terminal](https://www.microsoft.com/en-us/p/ubuntu/9nblggh4msv6) as an app which is based on the [Windows Subsystem for Linux](https://docs.microsoft.com/en-us/windows/wsl/about) framework and then follow the instructions for [obtaining the Conda packages](Installation.md#conda-packages) (recommended) or [building from source](Build_From_Source.md#building-from-source). Support for visualization is enabled using a browser-based [Jupyter notebook](https://jupyter.org/) which can also be installed via the Ubuntu terminal. For Windows 8 and older versions, you can use the free Unix-compatibility environment [Cygwin](http://www.cygwin.org/) following these [instructions](http://novelresearch.weebly.com/installing-meep-in-windows-8-via-cygwin.html).

### Are there precompiled binary packages for Ubuntu?

Yes. Ubuntu and Debian packages can be obtained via the package manager [APT](https://en.wikipedia.org/wiki/APT_(Debian)) as described in [Download](Download.md#precompiled-packages-for-ubuntu). However, the Meep packages for Ubuntu 16.04 ([serial](https://packages.ubuntu.com/xenial/meep) and [parallel](https://packages.ubuntu.com/xenial/meep-openmpi)) and 18.04 ([serial](https://packages.ubuntu.com/bionic/meep) and [parallel](https://packages.ubuntu.com/bionic/meep-openmpi)) are for [version 1.3](https://github.com/NanoComp/meep/releases/tag/1.3) (September 2017) which is out of date. The Meep package for Ubuntu is in the process of being updated and will likely appear in Ubuntu 19.10 as derived from the [unstable Debian package](https://packages.debian.org/unstable/meep). In the meantime, since the [Scheme interface](Scheme_User_Interface.md) is no longer being supported and has been replaced by the [Python interface](Python_User_Interface.md), you can use the [Conda packages](Installation.md#conda-packages).

### Guile is installed, but configure complains that it can't find `guile`

With most Linux distributions as well as Cygwin, packages like [Guile](http://www.gnu.org/software/guile) are split into two parts: a `guile` package that just contains the libraries and executables, and a `guile-dev` or `guile-devel` package that contains the header files and other things needed to compile programs using Guile. Usually, the former is installed by default but the latter is not. You need to install both, which means that you probably need to install `guile-dev`. The same applies to any other library packages needed by Meep.

Physics
-------

### How does the current amplitude relate to the resulting field amplitude?

There is no simple formula relating the input current amplitude ($\mathbf{J}$ in Maxwell's equations) to the resulting fields ($\mathbf{E}$, etc.), even at the same point as the current. The exact same current will produce a different field and radiate a different total power depending upon the surrounding materials/geometry, and depending on the frequency. This is a physical consequence of the geometry's effect on the local density of states (LDOS); it can also be thought of as feedback from reflections on the source. A classic example is an antenna in front of a ground plane, which radiates very different amounts of power depending on the distance between the antenna and the plane (half wavelength vs. quarter wavelength, for example). Alternatively, if you put a current source inside a perfect electric conductor, the resulting field will be zero. Also, as the frequency of the current increases, the amplitude of the resulting field will also increase. (This is related to [Rayleigh scattering](https://en.wikipedia.org/wiki/Rayleigh_scattering) which explains why the sky is blue: scattered power increases with frequency; alternatively, the density of states increases as the frequency to the $d-1$ power in $d$ dimensions.)

For a leaky resonant mode where the fields are spatially confined and decaying away exponentially with time, the power expended by a dipole source at a given frequency and position is proportional to the ratio of the [quality factor](https://en.wikipedia.org/wiki/Q_factor) ($Q$) and modal volume ($V_m$). This is known as [Purcell enhancement](https://en.wikipedia.org/wiki/Purcell_effect) of the LDOS: the same current source in a higher Q cavity emits more power if the coupling to the mode is the same.

(On the other hand, if you were to put in a dipole source with a fixed *voltage*, instead of a fixed *current*, you would get less power out with higher $Q$. For an antenna, the Purcell enhancement factor $Q/V_m$ is proportional to its [radiation resistance](https://en.wikipedia.org/wiki/Radiation_resistance) $R$. If you fix current $I$, then power $I^2R$ increases with resistance whereas if you fix voltage $V$ then the power $V^2/R$ decreases with resistance.)

For a mathematical description, see Section 4.4 ("Currents and Fields: The Local Density of States") in [Chapter 4](http://arxiv.org/abs/arXiv:1301.5366) ("Electromagnetic Wave Source Conditions") of [Advances in FDTD Computational Electrodynamics: Photonics and Nanotechnology](https://www.amazon.com/Advances-FDTD-Computational-Electrodynamics-Nanotechnology/dp/1608071707).

If you are worried about this, then you are probably setting up your calculation in the wrong way. Especially in linear materials, the absolute magnitude of the field is useless; the only meaningful quantities are dimensionless ratios like the fractional transmittance: the transmitted power relative to the transmitted power in some reference calculation. Almost always, you want to perform two calculations, one of which is a reference, and compute the ratio of a result in one calculation to the result in the reference. For nonlinear calculations, see [Units and Nonlinearity](Units_and_Nonlinearity.md).
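As a minimal sketch of the two-run normalization described above, the following helper divides the flux from a run with the structure by the flux from a reference run, frequency by frequency. The function name and the sample numbers are hypothetical; in practice the two lists would come from flux monitors in the two simulations.

```python
def fractional_transmittance(flux_with_structure, flux_reference):
    """Return the per-frequency ratio of transmitted flux to reference flux."""
    if len(flux_with_structure) != len(flux_reference):
        raise ValueError("both runs must use the same frequency grid")
    return [s / r for s, r in zip(flux_with_structure, flux_reference)]


# Made-up flux values in arbitrary units, for illustration only.
reference = [2.0, 4.0, 8.0]
structure = [1.0, 3.0, 8.0]
print(fractional_transmittance(structure, reference))

# In a linear system, doubling the source amplitude scales every flux by 4,
# so the ratio (the physically meaningful quantity) is unchanged.
scaled_reference = [4.0 * f for f in reference]
scaled_structure = [4.0 * f for f in structure]
print(fractional_transmittance(scaled_structure, scaled_reference))
```

Both calls print the same ratios, which is why the absolute source amplitude does not need to be calibrated for this kind of calculation.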

### How is the source current defined?

The source current in Meep is defined as a [free-charge current $\mathbf{J}$ in Maxwell's equations](Introduction.md#maxwells-equations). Meep does not simulate the driving force behind this free-charge current, nor does the current have to be placed in a conductor. Specifying a current means that somehow you are shaking a [charge](https://en.wikipedia.org/wiki/Electric_charge) at that point (by whatever means, Meep doesn't care) and you want to know the [resulting fields](#how-does-the-current-amplitude-relate-to-the-resulting-field-amplitude). In a linear system, multiplying $\mathbf{J}$ by 2 results in multiplying the fields by 2.

Note that the time integral $\int_{-\infty}^t \mathbf{J}(t') dt' = \mathbf{P}$ of the free current is a free-charge polarization $\mathbf{P}$, so if this integral is nonzero when the current has turned off, it corresponds to an electrostatic free-charge distribution that is left in the system, which produces electrostatic electric fields (depending also on the surrounding medium, of course). For example, the Gaussian current source in Meep can have a slightly nonzero integral, and the signature of this is that a slight electrostatic field may be left over after the simulation has run for a long time. This has no effect on Fourier-transformed (DFT) field quantities in Meep at nonzero frequencies, but it can occasionally be an annoyance.
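To see how a pulse can leave a net polarization behind, the sketch below numerically integrates a generic Gaussian-modulated cosine current and compares the result with the closed-form value $w\sqrt{2\pi}\,e^{-\omega^2 w^2/2}$. This is an illustrative pulse shape, not Meep's exact `GaussianSource` time profile, and the function names and parameter values are assumptions.

```python
import math


def pulse(t, omega, w):
    """Generic Gaussian-modulated cosine current centered at t = 0."""
    return math.cos(omega * t) * math.exp(-t * t / (2 * w * w))


def time_integral(omega, w, half_span=10.0, steps=20000):
    """Trapezoidal estimate of the integral of pulse(t) over [-half_span*w, half_span*w]."""
    a, b = -half_span * w, half_span * w
    dt = (b - a) / steps
    total = 0.5 * (pulse(a, omega, w) + pulse(b, omega, w))
    for i in range(1, steps):
        total += pulse(a + i * dt, omega, w)
    return total * dt


def analytic_integral(omega, w):
    """Closed-form integral of pulse(t) over the whole real line."""
    return w * math.sqrt(2 * math.pi) * math.exp(-0.5 * (omega * w) ** 2)


omega = 2 * math.pi  # angular frequency for a center frequency of 1
for w in (0.25, 0.5, 2.0):  # smaller w means a shorter, broader-band pulse
    print(w, time_integral(omega, w), analytic_integral(omega, w))
```

For this pulse shape the integral is small but nonzero, and it falls off rapidly as the pulse gets longer relative to the oscillation period.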

In order to get rid of this residual electrostatic field, you can set the `is_integrated` parameter of the source time-dependence (e.g. [`GaussianSource`](Python_User_Interface.md#gaussiansource)) to `True` (default is `False`): what this does is to make the source term equal to the polarization $\mathbf{P}$ (the *integral* of $\mathbf{J}$), which enters Maxwell's equations via the relationship between $\mathbf{E}$ and $\mathbf{D}$, and thus ensures that when the source is zero, the free polarization is also zero. This is demonstrated in the figure below showing a plot of the electric energy over the entire cell versus simulation time for a point dipole in 3d vacuum surrounded by PML: for `is_integrated=False`, we see a slight residual electric field at large times, which vanishes for `is_integrated=True`.

In the [interface](Python_User_Interface.md#source), the source currents are labeled $E_x$ or $H_y$ etc. according to what components of the electric/magnetic fields they correspond to.

### How do I compute the local density of states (LDOS) in a lossy material?

If you put a point source *inside* a lossy material (e.g., a [Lorentz-Drude metal](Materials.md#material-dispersion)), then the power expended by a dipole diverges as you increase the resolution. In the limit of infinite resolution, infinite power is absorbed. LDOS is not well defined for points inside of a lossy material.

However, LDOS is perfectly well defined for points *outside* of a lossy material. For example, you can choose a point outside of a lossy object and calculate the [LDOS](Python_User_Interface.md#ldos-spectra), and it will converge to a finite value as you increase the resolution. For an example, see [Tutorial/Local Density of States](../Python_Tutorials/Local_Density_of_States/).

### How do I set the imaginary part of ε?

If you only care about the imaginary part of $\varepsilon$ in a narrow bandwidth around some frequency $\omega$, you should set it by using the electric [conductivity](Materials.md#conductivity-and-complex). If you care about the imaginary part of $\varepsilon$ over a broad bandwidth, then for any physical material the imaginary part will be frequency-dependent and you will have to fit the data to a [Drude-Lorentz susceptibility model](Materials.md#material-dispersion).
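As a sketch of the narrow-band approach, the helper below converts a target complex permittivity $\varepsilon_r + i\varepsilon_i$ at a single frequency $f$ into a conductivity value. It assumes the relation $\varepsilon = \varepsilon_r\,(1 + i\sigma_D/(2\pi f))$ described in the linked conductivity section; the function names are hypothetical and the numbers are only an example, so check the relation against the Materials documentation before relying on it.

```python
import math


def d_conductivity(eps_real, eps_imag, frequency):
    """Conductivity that yields eps_real + 1j*eps_imag at the given frequency."""
    return 2 * math.pi * frequency * eps_imag / eps_real


def complex_epsilon(eps_real, sigma_d, frequency):
    """Effective complex permittivity produced by sigma_d at the given frequency."""
    return eps_real * (1 + 1j * sigma_d / (2 * math.pi * frequency))


sigma = d_conductivity(3.4, 0.101, 0.42)
print(sigma)
print(complex_epsilon(3.4, sigma, 0.42))  # matches the target at the design frequency
print(complex_epsilon(3.4, sigma, 0.84))  # imaginary part differs at other frequencies
```

In the Python interface the resulting value would typically be supplied as the `D_conductivity` argument of `mp.Medium`. The last line shows why this only works for a narrow bandwidth: the imaginary part scales as $1/f$ away from the design frequency.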

Meep doesn't implement a frequency-independent complex $\varepsilon$. Not only is this not physical, but it also leads to both exponentially decaying and exponentially growing solutions in Maxwell's equations from positive- and negative-frequency Fourier components, respectively. Thus, it cannot be simulated in the time domain.

### Why does my simulation diverge if the permittivity ε is less than 0?

Maxwell's equations have exponentially growing solutions for a frequency-independent negative $\varepsilon$. For any physical medium with negative $\varepsilon$, there must be dispersion, and you must likewise use dispersive materials in Meep to obtain negative $\varepsilon$ at some desired frequency. The requirement of dispersion to obtain negative $\varepsilon$ follows from the [Kramers–Kronig relations](https://en.wikipedia.org/wiki/Kramers%E2%80%93Kronig_relations), and also follows from thermodynamic considerations that the energy in the electric field must be positive. For example, see [Electrodynamics of Continuous Media](https://www.amazon.com/Electrodynamics-Continuous-Media-Second-Theoretical/dp/0750626348) by Landau, Pitaevskii, and Lifshitz. At an even more fundamental level, it can be derived from passivity constraints as shown in [Physical Review A, Vol. 90, 023847 (2014)](http://arxiv.org/abs/arXiv:1405.0238).

If you solve Maxwell's equations in a homogeneous-$\varepsilon$ material at some real wavevector $\mathbf{k}$, you get a dispersion relation $\omega^2 = c^2 |\mathbf{k}|^2 / \varepsilon$. If $\varepsilon$ is positive, there are two real solutions $\omega = \pm c |\mathbf{k}| / \sqrt{\varepsilon}$, giving oscillating solutions. If $\varepsilon$ is negative, there are two imaginary solutions corresponding to exponentially decaying and exponentially growing solutions from any current source. These solutions can always be spatially decomposed into a superposition of real-$\mathbf{k}$ values via a spatial Fourier transform.
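The dispersion relation above can be evaluated directly. The following sketch, with hypothetical function names and units in which $c = 1$, returns both roots $\omega$ and the growth rate implied by the $\exp(-i\omega t)$ time convention, for which $|\exp(-i\omega t)| = \exp(\mathrm{Im}(\omega)\,t)$.

```python
import cmath


def frequencies(k, eps, c=1.0):
    """Return the two roots omega = +/- c*k/sqrt(eps) of omega^2 = c^2 k^2 / eps."""
    root = c * k / cmath.sqrt(eps)
    return root, -root


def growth_rate(k, eps, c=1.0):
    """Largest Im(omega); a positive value means exp(-i*omega*t) grows in time."""
    return max(w.imag for w in frequencies(k, eps, c))


print(frequencies(1.0, 4.0), growth_rate(1.0, 4.0))    # real roots: oscillation only
print(frequencies(1.0, -4.0), growth_rate(1.0, -4.0))  # imaginary roots: one grows
```

For positive $\varepsilon$ the growth rate is zero, whereas for negative $\varepsilon$ one of the two roots always has a positive imaginary part.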

If you do a simulation of any kind in the time domain (not just FDTD), you pretty much can't avoid exciting both the decaying and the growing solutions. This is *not* a numerical instability, it is a real solution of the underlying equations for an unphysical material.

See [Materials](Materials.md#material-dispersion) for how to include dispersive materials which can have negative $\varepsilon$ and loss.

If you have nondispersive negative $\varepsilon$ *and* negative $\mu$ *everywhere*, the case of a negative-index material, then the simulation is fine, but our PML implementation doesn't currently support this situation (unless you edit the [source code](https://github.com/NanoComp/meep/blob/e3e397c485326366b0b38162493fbb297027d503/src/structure.cpp#L651) to flip the sign), and in any case such simulations are trivially [equivalent](https://math.mit.edu/~stevenj/18.369/coordinate-transform.pdf) to positive-index simulations under coordinate inversion $(x,y,z) \to (-x,-y,-z)$. However at the boundary between nondispersive negative- and positive-index materials, you will encounter instabilities: because of the way Maxwell's equations are discretized in FDTD, the $\varepsilon$ and $\mu$ are discretized on different spatial grids, so you will get a half-pixel or so of $\varepsilon\mu\lt 0$ at the boundary between negative and positive indices, which will cause the simulation to diverge. But of course, any physical negative-index metamaterial also involves dispersion.

Note also that, as a consequence of the above analysis, $\varepsilon$ must go to a positive value in the $\omega\to\pm\infty$ limit to get non-diverging solutions of Maxwell's equations. So the $\varepsilon_\infty$ in your [dispersion model](Materials.md#material-dispersion) must be positive.

### Why are there strange peaks in my reflectance/transmittance spectrum when modeling planar or periodic structures?

There are two possible explanations: (1) the simulation run time may be too short or the resolution may be too low and thus your results have not sufficiently [converged](#checking-convergence), or (2) you may be using a higher-dimensional cell with multiple periods (a supercell) which introduces unwanted additional modes due to band folding. One indication that band folding is present is that small changes in the resolution produce large and unexpected changes in the frequency spectra. Modeling flat/planar structures typically requires a 1d cell and periodic structures a single unit cell in 2d/3d. For more details, see Section 4.6 ("Sources in Supercells") in [Chapter 4](http://arxiv.org/abs/arXiv:1301.5366) ("Electromagnetic Wave Source Conditions") of [Advances in FDTD Computational Electrodynamics: Photonics and Nanotechnology](https://www.amazon.com/Advances-FDTD-Computational-Electrodynamics-Nanotechnology/dp/1608071707). Note that a 1d cell must be along the $z$ direction with only the $E_x$ and $H_y$ field components permitted.

### How do I model the solar radiation spectrum?

For simulations involving [solar radiation](https://en.wikipedia.org/wiki/Sunlight#Surface_illumination), the [reflectance/transmittance spectra](Introduction.md#transmittancereflectance-spectra) are computed using the standard procedure involving [two separate calculations (i.e., the first to obtain the input power and the second for the scattered power)](Introduction.md#transmittancereflectance-spectra). Since typical solar-cell problems are linear, the reflected/transmitted power can then be obtained by simply multiplying the reflectance/transmittance (a fractional quantity) by the [solar spectrum](https://en.wikipedia.org/wiki/Air_mass_(solar_energy)) (a dimensionful quantity).
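A minimal sketch of this postprocessing step is shown below: a fractional spectrum is multiplied by an irradiance spectrum and integrated with the trapezoidal rule to give a solar-weighted fraction. The function names are hypothetical, and the sample arrays are placeholder values rather than real solar or simulation data.

```python
def trapezoid(x, y):
    """Trapezoidal integral of samples y on a (possibly non-uniform) grid x."""
    return sum(0.5 * (y[i] + y[i + 1]) * (x[i + 1] - x[i]) for i in range(len(x) - 1))


def solar_weighted_fraction(wavelengths, fraction, irradiance):
    """Integral of fraction*irradiance divided by the integral of irradiance."""
    weighted = [f * s for f, s in zip(fraction, irradiance)]
    return trapezoid(wavelengths, weighted) / trapezoid(wavelengths, irradiance)


# Placeholder values for illustration only (not a real solar spectrum).
wavelengths = [0.4, 0.6, 0.8, 1.0]
reflectance = [0.10, 0.20, 0.30, 0.40]
irradiance = [1.0, 1.5, 1.2, 0.8]
print(solar_weighted_fraction(wavelengths, reflectance, irradiance))
```

Because the weighting is applied after the simulation, the same reflectance data can be reused with a different input spectrum without rerunning anything.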

In general, the accuracy of any type of calculation involving a *weighted* input/output spectrum can be improved and the size of the simulation reduced using a coarse, unequally spaced frequency grid which is passed as an array/list to [`add_flux`](Python_User_Interface.md#flux-spectra). This involves precomputing the frequency points and weights given the air mass spectrum as demonstrated in the notebook [Solar-weighted Gaussian Quadrature](https://nbviewer.jupyter.org/urls/math.mit.edu/~stevenj/Solar-Quadrature.ipynb?flush_cache=true).

### Are complex fields physical?

No. Unlike quantum mechanics, complex fields in classical electromagnetics are not physical; they are simply a convenient mathematical representation of sinusoidal waves using [Euler's formula](https://en.wikipedia.org/wiki/Euler%27s_formula), sometimes called a [phasor](https://en.wikipedia.org/wiki/Phasor). In a linear system, one can always take the real part at the end of the computation to obtain a physical result. When there are nonlinearities, the physical interpretation is much less obvious.

Note: specifying a complex `amplitude` for the `Source` object does not automatically yield complex fields. Unless the parameter `force_complex_fields=True` is specified, only the real part of the source is used. The complex amplitude is just a phase shift of the real sinusoidal source.
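The statement that a complex amplitude is just a phase shift can be checked numerically. The sketch below, with hypothetical function names, compares $\mathrm{Re}[A e^{-i\omega t}]$ with $|A|\cos(\omega t - \arg A)$ using the $\exp(-i\omega t)$ time convention.

```python
import cmath
import math


def real_source(amplitude, omega, t):
    """Real part of a complex-amplitude sinusoid A*exp(-i*omega*t)."""
    return (amplitude * cmath.exp(-1j * omega * t)).real


def shifted_cosine(amplitude, omega, t):
    """Equivalent real cosine with magnitude |A| and phase shift arg(A)."""
    return abs(amplitude) * math.cos(omega * t - cmath.phase(amplitude))


amplitude = 0 + 1j  # a purely imaginary amplitude is a quarter-period shift
omega = 2 * math.pi * 0.15
for t in (0.0, 1.0, 2.5):
    print(t, real_source(amplitude, omega, t), shifted_cosine(amplitude, omega, t))
```

The two columns agree at every time, so the real fields produced by the real part of the source already carry the phase information.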

### How do I model incoherent spontaneous/thermal emission?

Semiclassically, [spontaneous](https://en.wikipedia.org/wiki/Spontaneous_emission) or [thermal](https://en.wikipedia.org/wiki/Thermal_radiation) emission can be modeled simply as random dipole current sources. One direct way to express this in Meep is to use a [Monte Carlo method](https://en.wikipedia.org/wiki/Monte_Carlo_method): take an ensemble average of multiple runs involving a collection of random dipole sources. See [Tutorial/Custom Source/Stochastic Dipole Emission in Light Emitting Diodes](Python_Tutorials/Custom_Source.md#stochastic-dipole-emission-in-light-emitting-diodes). For example, to model thermal radiation in linear materials, you can use a [custom source function](Python_User_Interface.md#customsource) to input [white-noise](https://en.wikipedia.org/wiki/White_noise) sources with the appropriate noise spectrum included via postprocessing by scaling the output spectrum as explained in these references: [far field](https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.93.213905) and [near field](http://doi.org/10.1103/PhysRevLett.107.114302) cases. There is also a [noisy Lorentzian material](Python_User_Interface.md#noisylorentziansusceptibility-or-noisydrudesusceptibility) that can be used to model thermal fluctuations even more directly as noise in the materials themselves.

Usage: Sources
--------------

### How do I create an oblique planewave source?

An arbitrary-angle planewave with wavevector $\vec{k}$ can be generated in two different ways: (1) by setting the amplitude function [`amp_func`](Python_User_Interface.md#source) to $\exp(i\vec{k}\cdot\vec{r})$ for a $d-1$ dimensional source in a $d$ dimensional cell (i.e., line source in 2d, planar source in 3d), or (2) via the [eigenmode source](Python_User_Interface.md#eigenmodesource). These two approaches generate **identical** planewaves with the only difference being that the planewave produced by the eigenmode source is unidirectional. In both cases, generating an infinitely-extended planewave requires that: (1) the source span the *entire* length of the cell and (2) the Bloch-periodic boundary condition `k_point` be set to $\vec{k}$.

The first approach involving the amplitude function is based on the principle that just as you can create a directional antenna by a [phased array](https://en.wikipedia.org/wiki/Phased_array), you can create a directional source by setting the phase of the current appropriately. Alternatively, by specifying the wavevector of the fields in $d-1$ directions of a $d$-dimensional cell, the wavevector in the remaining direction is automatically defined by the frequency $\omega$ via the dispersion relation for a planewave in homogeneous medium with index $n$: $\omega = c|\vec{k}|/n$. Note that for a pulsed source (unlike a continuous wave), each frequency component produces a planewave at a *different* angle. Also, the fields do *not* have to be complex (which would double the storage requirements).
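The first approach can be sketched in plain Python as follows. The helpers compute the wavevector components from the dispersion relation (in units where $c = 1$ and $\vec{k}$ is expressed in units of $2\pi$, so that $|\vec{k}| = nf$), build an amplitude function, and show how the angle changes for another frequency component when the transverse wavevector is held fixed. All names are hypothetical and positions are plain tuples here, whereas Meep passes its own vector type to `amp_func`.

```python
import cmath
import math


def planewave_k(frequency, index, theta):
    """(kx, ky) in units of 2*pi for a planewave at angle theta from the x axis."""
    k = frequency * index
    return (k * math.cos(theta), k * math.sin(theta))


def pw_amp(k, x0=(0.0, 0.0)):
    """Return an amplitude function exp(2*pi*i * k.(x + x0)) of a position x."""
    def _amp(x):
        phase = 2 * math.pi * (k[0] * (x[0] + x0[0]) + k[1] * (x[1] + x0[1]))
        return cmath.exp(1j * phase)
    return _amp


def angle_at_frequency(ky, frequency, index):
    """Propagation angle of the component at `frequency` when ky is held fixed."""
    return math.asin(ky / (frequency * index))


k = planewave_k(frequency=1.0, index=1.5, theta=math.radians(30))
amp = pw_amp(k)
print(k, amp((0.0, 0.25)))
print(math.degrees(angle_at_frequency(k[1], 1.2, 1.5)))  # a different angle at f = 1.2
```

The last line illustrates the note about pulsed sources: with the transverse wavevector fixed by the boundary condition, only the center frequency propagates at the nominal angle.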

For an example of the first approach in 1d, see [Tutorial/Basics/Angular Reflectance of a Planar Interface](Python_Tutorials/Basics.md#angular-reflectance-spectrum-of-a-planar-interface) ([Scheme version](Scheme_Tutorials/Basics.md#angular-reflectance-spectrum-of-a-planar-interface)). For 2d, see [Tutorial/Mode Decomposition/Reflectance and Transmittance Spectra for Planewave at Oblique Incidence](Python_Tutorials/Mode_Decomposition.md#reflectance-and-transmittance-spectra-for-planewave-at-oblique-incidence) ([Scheme version](Scheme_Tutorials/Mode_Decomposition.md#reflectance-and-transmittance-spectra-for-planewave-at-oblique-incidence)) as well as [examples/pw-source.py](https://github.com/NanoComp/meep/blob/master/python/examples/pw-source.py) ([Scheme version](https://github.com/NanoComp/meep/blob/master/scheme/examples/pw-source.ctl)).

For an example of the second approach, see [Tutorial/Eigenmode Source/Planewaves in Homogeneous Media](Python_Tutorials/Eigenmode_Source.md#planewaves-in-homogeneous-media) ([Scheme version](Scheme_Tutorials/Eigenmode_Source.md#planewaves-in-homogeneous-media)).

### How do I create a focused beam with a Gaussian envelope?

A focused beam with a Gaussian envelope can be created using the [`GaussianBeamSource`](Python_User_Interface.md#gaussianbeamsource) as demonstrated in this [script](https://github.com/NanoComp/meep/blob/master/python/examples/gaussian-beam.py). The following are four snapshots of the resulting field profile generated using a line source (red line) in 2d for different values of the beam waist radius/width $w_0$ (`beam_w0`), propagation direction $\vec{k}$ (`beam_kdir`), and focus (`beam_x0`):

Note: beams in a homogeneous material do *not* have a fixed width in Maxwell's equations; they always spread out during propagation (i.e., diffraction). The [numerical aperture (NA)](https://en.wikipedia.org/wiki/Gaussian_beam#Beam_divergence) of a Gaussian beam with waist radius $w$ and vacuum wavelength $\lambda$ within a medium of index $n$ is $n\sin(\lambda/(\pi nw))$.
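As a quick numerical illustration of the expression above, the following sketch evaluates the numerical aperture for a few waist radii. The function name is hypothetical and the values are arbitrary examples.

```python
import math


def gaussian_beam_na(wavelength, waist_radius, index=1.0):
    """Numerical aperture n*sin(lambda/(pi*n*w)) of a Gaussian beam."""
    return index * math.sin(wavelength / (math.pi * index * waist_radius))


for w in (0.8, 1.0, 2.0):
    print(w, gaussian_beam_na(wavelength=1.0, waist_radius=w))
```

A smaller waist radius gives a larger numerical aperture, meaning that a more tightly focused beam spreads out faster away from the focus.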

### How do I create a circularly polarized planewave source in cylindrical coordinates?

A circularly polarized planewave in [cylindrical coordinates](Python_Tutorials/Cylindrical_Coordinates.md) corresponds to $\vec{E}=(\hat{r}+i\hat{\phi})\exp(i\phi)$. This can be created using a constant $E_r$ (radial) current source with `amplitude`=1 and a constant $E_p$ (azimuthal) current source with `amplitude`=0+1i as well as `m`=1.

### How do I model a moving point charge?

You can use an instantaneous [`ContinuousSource`](Python_User_Interface.md#continuoussource) with large wavelength (or nearly-zero frequency). This is analogous to a [direct current](https://en.wikipedia.org/wiki/Direct_current). You will also need to create a [run function](Python_User_Interface.md#run-functions) which contains [`change_sources`](Python_User_Interface.md#reloading-parameters) and specify the `center` property of the point source to be time dependent. As an example, the following image demonstrates [Cherenkov radiation](https://en.wikipedia.org/wiki/Cherenkov_radiation) involving a moving point charge with [superluminal phase velocity](https://en.wikipedia.org/wiki/Faster-than-light#Phase_velocities_above_c) (see [examples/cherenkov-radiation.py](https://github.com/NanoComp/meep/blob/master/python/examples/cherenkov-radiation.py)).

### Why doesn't the continuous-wave (CW) source produce an exact single-frequency response?

The [ContinuousSource](Python_User_Interface.md#continuoussource) does not produce an exact single-frequency response $\exp(-i\omega t)$ due to its [finite turn-on time](https://github.com/NanoComp/meep/blob/master/src/sources.cpp#L104-L122) which is described by a hyperbolic-tangent function. In the asymptotic limit, the resulting fields are the single-frequency response; it's just that if you Fourier transform the response over the *entire* simulation you will see a finite bandwidth due to the turn-on.

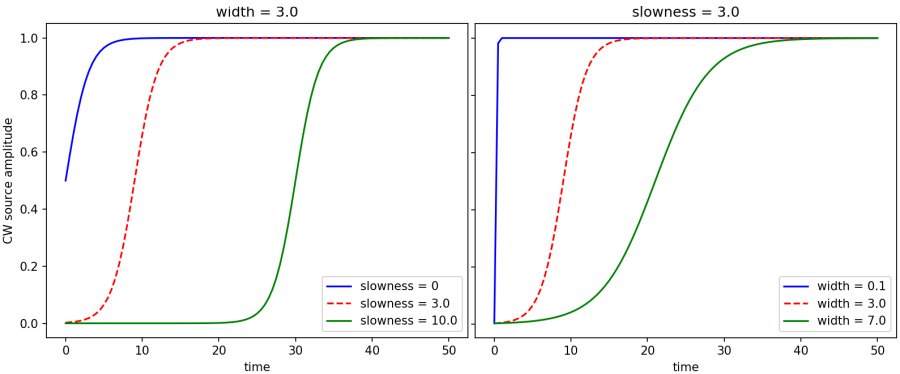

If the `width` is 0 (the default) then the source turns on sharply which creates high-frequency transient effects. Otherwise, the source turns on with a shape of (1 + tanh(t/`width` - `slowness`))/2. That is, the `width` parameter controls the width of the turn-on. The `slowness` parameter controls how far into the exponential tail of the tanh function the source turns on. The default `slowness` of 3.0 means that the source turns on at $(1 + \tanh(-3))/2 = 0.00247$ of its maximum amplitude. A larger value for `slowness` means that the source turns on even more gradually at the beginning (i.e., farther in the exponential tail). The effect of varying the two parameters `width` and `slowness` independently in the turn-on function is shown below.
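The turn-on envelope described above is simple to evaluate. The sketch below uses a hypothetical function name and only covers the case of a positive `width`.

```python
import math


def turn_on(t, width, slowness=3.0):
    """Envelope (1 + tanh(t/width - slowness))/2 for a positive width."""
    if width <= 0:
        raise ValueError("this sketch only covers width > 0")
    return 0.5 * (1 + math.tanh(t / width - slowness))


width = 5.0
print(turn_on(0.0, width))                # initial amplitude for the default slowness
print(turn_on(width * 3.0, width))        # halfway point at t = width * slowness
print(turn_on(0.0, width, slowness=6.0))  # larger slowness: smaller initial amplitude
```

The envelope reaches half of its maximum at `t = width * slowness`, so increasing either parameter delays the point at which the source is fully on.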

Note: even if you have a continuous wave (CW) source at a frequency $\omega$, the time dependence of the electric field after transients have died away won't necessarily be $\cos(\omega t)$, because in general there is a phase difference between the current and the resulting fields. In general for a CW source you will eventually get fields proportional to $\cos(\omega t-\phi)$ for some phase $\phi$ which depends on the field component, the source position, and the surrounding geometry.

### Why does the amplitude of a point dipole source increase with resolution?

The field from a point source is singular — it blows up as you approach the source. At any finite grid resolution, this singularity is truncated to a finite value by the grid discretization but the peak field at the source location increases as you increase the resolution.

### Is a narrow-bandwidth Gaussian pulse considered the same as a continuous-wave (CW) source?

No. A narrow-bandwidth Gaussian is still a Gaussian: it goes to zero at both the beginning and end of its time profile unlike a continuous-wave (CW) source which oscillates indefinitely (but has a [finite turn-on](#why-doesnt-the-continuous-wave-cw-source-produce-an-exact-single-frequency-response)). Assuming you have linear materials, you should get the same results if you use a narrow- or broad-band pulse and look at a single frequency component of the Fourier transform via e.g. [`dft_fields`](Python_User_Interface.md#field-computations). The latter has the advantage that it requires a shorter simulation for the fields to decay away due to the [Fourier Uncertainty Principle](https://en.wikipedia.org/wiki/Fourier_transform#Uncertainty_principle). Note also that an almost *zero*-bandwidth Gaussian will produce high-frequency spectral components due to its abrupt turn on and off which are poorly absorbed by PML.

### How do I create a chirped pulse?

You can use a [`CustomSource`](Python_User_Interface.md#customsource) to define an arbitrary time-profile for the [`Source`](Python_User_Interface.md#source) object. As an example, the following snapshots of the out-of-plane electric field demonstrate a [linear-chirped pulse](https://www.rp-photonics.com/chirp.html) planewave propagating from the left to the right with higher frequencies (smaller wavelengths) at the front (i.e., a down chirp). For the simulation script, see [examples/chirped_pulse.py](https://github.com/NanoComp/meep/blob/master/python/examples/chirped_pulse.py).
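A time profile of this kind can be sketched as a plain Python function of time. The example below is only an illustration of a Gaussian envelope with a quadratic phase; the parameter values (`fcen`, `a`, `b`, `t0`) and the helper `instantaneous_frequency` are assumptions and are not taken from the linked script.

```python
import cmath
import math

fcen = 0.5  # center frequency (illustrative)
a = 0.2     # decay constant of the Gaussian envelope (illustrative)
b = -0.5    # linear chirp rate; a negative value gives a down chirp
t0 = 15.0   # time at which the envelope peaks


def chirp(t):
    """Complex amplitude of a Gaussian pulse with a quadratic (chirped) phase."""
    tau = t - t0
    carrier = cmath.exp(1j * 2 * math.pi * fcen * tau)
    envelope = cmath.exp((-a + 1j * b) * tau**2)
    return carrier * envelope


def instantaneous_frequency(t):
    """Time derivative of the phase of chirp(t), divided by 2*pi."""
    return fcen + b * (t - t0) / math.pi


print(instantaneous_frequency(t0 - 2.0), instantaneous_frequency(t0 + 2.0))
```

Because `b` is negative, the instantaneous frequency is higher before the peak than after it, so the higher frequencies are emitted first and end up at the front of the pulse. A function like `chirp` is the kind of callable that would be passed as the `src_func` argument of `CustomSource`.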

Usage: Fields
-------------

### Why are the fields blowing up in my simulation?

Instability in the fields is likely due to one of five causes: (1) [PML](Python_User_Interface.md#pml) overlapping dispersive materials based on a [Drude-Lorentzian susceptibility](Python_User_Interface.md#lorentziansusceptibility) in the presence of [backward-wave modes](https://journals.aps.org/pre/abstract/10.1103/PhysRevE.79.065601) (fix: replace the PML with an [Absorber](Python_User_Interface.md#absorber)), (2) the frequency of a Lorentzian susceptibility term is *too high* relative to the grid discretization (fix: increase the `resolution` and/or reduce the `Courant` factor), (3) a material with a [wavelength-independent negative real permittivity](#why-does-my-simulation-diverge-if-0) (fix: [fit the permittivity to a broadband Drude-Lorentzian susceptibility](#how-do-i-import-n-and-k-values-into-meep)), (4) a grid voxel contains *more than one* dielectric interface (fix: turn off subpixel averaging), or (5) a material with a *wavelength-independent* refractive index between 0 and 1 (fix: reduce the `Courant` factor; alternatively, [fit the permittivity to a broadband Drude-Lorentzian susceptibility](#how-do-i-import-n-and-k-values-into-meep)).

Note: when the fields blow up, the CPU *slows down* due to [floating-point exceptions in IEEE 754](https://en.wikipedia.org/wiki/IEEE_754#Exception_handling). Also, Meep automatically checks the fields at the cell origin after every timestep and [aborts the simulation if the electric energy density has diverged](https://github.com/NanoComp/meep/blob/master/src/step.cpp#L97-L98).

### How do I compute the steady-state fields?

The "steady-state" response is defined as the exp(-iωt) response field (ω=2πf is the angular frequency) from an exp(-iωt) source after all transients have died away. There are three different approaches for computing the steady-state fields: (1) use a continuous-wave (CW) source via [`ContinuousSource`](Python_User_Interface.md#continuoussource) with [smooth turn-on](#why-doesnt-the-continuous-wave-cw-source-produce-an-exact-single-frequency-response) and run for a long time (i.e., ≫ 1/f), (2) use the [frequency-domain solver](#what-is-meeps-frequency-domain-solver-and-how-does-it-work), or (3) use a broad-bandwidth pulse (which has [short time duration](#is-a-narrow-bandwidth-gaussian-pulse-considered-the-same-as-a-continuous-wave-cw-source)) via [`GaussianSource`](Python_User_Interface.md#gaussiansource) and compute the Fourier-transform of the fields via [`add_dft_fields`](Python_User_Interface.md#field-computations). Often, (2) and (3) require fewer timesteps to converge than (1). Note that Meep uses real fields by default and if you want complex amplitudes, you must set `force_complex_fields=True`.

### How do I compute S-parameters?

Meep contains a [mode-decomposition feature](Mode_Decomposition.md) which can be used to compute complex-valued [S-parameters](https://en.wikipedia.org/wiki/Scattering_parameters). An example is provided for a [two-port network](https://en.wikipedia.org/wiki/Two-port_network#Scattering_parameters_(S-parameters)) based on a silicon directional coupler in [Tutorial/GDSII Import](Python_Tutorials/GDSII_Import.md). Additional examples are available for a [waveguide mode converter](Python_Tutorials/Mode_Decomposition.md#reflectance-of-a-waveguide-taper) and [subwavelength grating](Python_Tutorials/Mode_Decomposition.md#phase-map-of-a-subwavelength-binary-grating).

### Harminv is unable to find the resonant modes of my structure

There are six possible explanations for why [Harminv](Python_User_Interface.md#harminv) could not find the resonant modes: (1) the run time was not long enough and the decay rate of the mode is so small that the Harminv data was mainly noise, (2) the Harminv call was not wrapped in [`after_sources`](Python_User_Interface.md#controlling-when-a-step-function-executes); if Harminv overlaps sources turning on and off it will get confused because the sources are not exponentially decaying fields, (3) the Harminv monitor is near the mode's nodal point (e.g., in a symmetry plane), (4) there are field instabilities where the fields are actually [blowing up](#why-are-the-fields-blowing-up-in-my-simulation); this may result in Harminv returning a negative [quality factor](https://en.wikipedia.org/wiki/Q_factor), (5) the decay rate of the mode is too fast; Harminv discards any modes which have a quality factor less than 50 where the leaky-mode approximation of the modes as perfectly exponentially decaying (i.e. a Lorentzian lineshape) begins to break down (and thus Harminv won't likely find any modes inside a [metal](Materials.md#material-dispersion)), or (6) the PML overlaps the non-radiated/evanescent field and has introduced artificial absorption effects in the local density of states (LDOS).

Harminv will find modes in perfect-conductor cavities (i.e. with no loss) with a quality factor that is very large and has an arbitrary sign; it has no way to tell that the decay rate is zero, it just knows it is very small.

Harminv becomes less effective as the frequency approaches zero, so you should specify a non-zero frequency range.

In order to resolve two closely-spaced modes, in general it is preferable to run with a narrow bandwidth source around the frequency of interest to excite/analyze as few modes as possible and/or increase the run time to improve the frequency resolution. If you want to analyze an arbitrary spectrum, just use the Fourier transform as computed by [`dft_fields`](Python_User_Interface.md#field-computations).

For a structure with a pair of doubly-degenerate modes (e.g., dipole-like modes or two counter-propagating modes in a ring resonator), the grid discretization will almost certainly break the degeneracy slightly. In this case, Harminv may find two *distinct* nearly-degenerate modes.

Note: any real-valued signal consists of both positive and negative frequency components (with complex-conjugate amplitudes) in a Fourier domain decomposition into complex exponentials. Harminv usually is set up to find just one sign of the frequency, but occasionally converges to a negative-frequency component as well; these are just as meaningful as the positive frequencies.

### How do I compute the effective index of an eigenmode of a lossy waveguide?

To compute the [effective index](https://www.rp-photonics.com/effective_refractive_index.html), you will need to first compute the *complex* $\omega$ (the loss in time) for a *real* $\beta$ (the propagation constant) and then convert this quantity into a loss in space (*complex* $\beta$ at a *real* $\omega$) by dividing by the group velocity $v_g$. This procedure is described in more detail below.

To obtain the loss in time, you make your computational cell a cross-section of your waveguide (i.e. 2d for a waveguide with constant cross-section), and set Bloch-periodic boundary conditions via the `k_point` input variable; this specifies your (real) $\beta$. You then treat it exactly the same as a [resonant-cavity problem](Python_Tutorials/Resonant_Modes_and_Transmission_in_a_Waveguide_Cavity.md#resonant-modes): you excite the system with a short pulse source, monitor the field at some point, and then analyze the result with [Harminv](Python_User_Interface.md#harminv); all of which is done if you call `run_k_points`. This will give you the complex $\omega$ at the given $\beta$, where the imaginary part is the loss rate in time. Note: the loss in a uniform waveguide, with no absorption or disorder, is zero, even in the discretized system.

That is, you have $\omega(\beta_r) = \omega_r + i\omega_i$ where the subscripts $r$ and $i$ denote real and imaginary parts. Now, what you want to do is to get the complex $\beta$ at the real $\omega$ which is given by: $\beta(\omega_r) = \beta_r - i\omega_i/v_g + \mathcal{O}(\omega_i^2)$. That is, to first order in the loss, the imaginary part of $\beta$ (the propagation loss) at the real frequency $\omega_r$ is given just by dividing $\omega_i$ by the group velocity $v_g = \frac{d\omega}{d\beta}$, which you can [get from the dispersion relation in the absence of loss](#how-do-i-compute-the-group-velocity-of-a-mode). This relationship is just a consequence of the first-order Taylor expansion of the dispersion relation $\omega(\beta)$ in the complex plane.
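The first-order conversion above amounts to a one-line calculation. The sketch below assumes that $\omega_r$, $\omega_i$, $\beta_r$, and $v_g$ are already known in consistent units; the function names and numerical values are hypothetical. It also assumes the common definition $Q = -\omega_r/(2\omega_i)$ for the exp(-iωt) convention, in case only a quality factor is available.

```python
def omega_i_from_q(omega_r, q):
    """Imaginary part of omega, assuming Q = -omega_r / (2 * omega_i)."""
    return -omega_r / (2.0 * q)


def complex_beta(beta_r, omega_i, v_g):
    """First-order estimate: beta(omega_r) = beta_r - i * omega_i / v_g."""
    return complex(beta_r, -omega_i / v_g)


# hypothetical values for a weakly lossy mode
omega_r = 0.30
beta_r = 0.45
v_g = 0.25
omega_i = omega_i_from_q(omega_r, q=1500.0)

beta = complex_beta(beta_r, omega_i, v_g)
print(f"omega_i = {omega_i:.3e}, beta = {beta:.6f}")
```

A mode that decays in time has a negative $\omega_i$, which gives a positive imaginary part of $\beta$ and therefore a field that decays along the propagation direction.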

This analysis is only valid if the loss is small, i.e. $\omega_i \ll \omega_r$. This should always be the case in any reasonable waveguide, where the light can travel for many wavelengths before dissipating/escaping. If you have extremely large losses so that it only propagates for a few wavelengths or less, then you would have to treat the problem differently — but in this case, the whole concept of a "waveguide mode" is not clearly defined.

### How do I compute the group velocity of a mode?

There are two possible approaches for manually computing the [group velocity](https://en.wikipedia.org/wiki/Group_velocity) $\nabla_\textbf{k}\omega$: (1) compute the [dispersion relation](Python_Tutorials/Resonant_Modes_and_Transmission_in_a_Waveguide_Cavity.md#band-diagram) $\omega(\textbf{k})$ using [Harminv](Python_User_Interface.md#harminv), fit it to a polynomial, and calculate its derivative using a [finite difference](https://en.wikipedia.org/wiki/Finite_difference) (i.e. $[ \omega(\textbf{k} + \Delta \textbf{k}) - \omega(\textbf{k}-\Delta \textbf{k}) ] / (2\|\Delta \textbf{k}\|)$), or (2) excite the mode using a narrowband pulse and compute the ratio of the Poynting flux to electric-field energy density.
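The central finite difference in approach (1) can be sketched as follows for a one-dimensional wavevector. The dispersion relation used here is a stand-in (a homogeneous medium of index `n` with $c=1$) so that the result can be checked by hand; in practice the two values $\omega(k \pm \Delta k)$ would come from separate simulations or from the polynomial fit.

```python
def group_velocity(omega, k, dk=1e-3):
    """Central finite difference [omega(k + dk) - omega(k - dk)] / (2 * dk)."""
    return (omega(k + dk) - omega(k - dk)) / (2.0 * dk)


n = 3.4  # hypothetical refractive index


def omega_homogeneous(k):
    """Stand-in dispersion relation omega = k / n (units with c = 1)."""
    return k / n


vg = group_velocity(omega_homogeneous, k=1.0)
print(vg, 1.0 / n)
```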

For eigenmodes obtained using [mode decomposition](Python_User_Interface.md#mode-decomposition), the group velocities are computed automatically along with the mode coefficients.

### How do I compute the time average of the harmonic fields?

For a linear system, you can use a [ContinuousSource](Python_User_Interface.md#continuoussource) with `force_complex_fields=True` and time-step the fields until all transients have disappeared. Once the fields have reached steady state, the instantaneous intensity $|E|^2 /2$ or [Poynting flux](https://en.wikipedia.org/wiki/Poynting_vector#Time-averaged_Poynting_vector) $\Re{[E^{*}\times H]}/2$ is equivalent to the time average. If you don't use complex fields, then these are just the instantaneous values at a given time, and will oscillate. An alternative to time-stepping is the [frequency-domain solver](Python_User_Interface.md#frequency-domain-solver).
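The equivalence between the instantaneous $|E|^2/2$ of a complex field and the time average of the squared real field can be checked numerically. This is a standalone sketch with a made-up complex amplitude `E0` and frequency; it does not involve Meep.

```python
import cmath
import math

omega = 2 * math.pi * 0.15   # angular frequency (illustrative)
E0 = 0.8 * cmath.exp(0.3j)   # complex steady-state amplitude (illustrative)


def field(t):
    """Complex steady-state field E0 * exp(-i * omega * t)."""
    return E0 * cmath.exp(-1j * omega * t)


period = 2 * math.pi / omega
num_samples = 1000

# time average of the squared real field over one period
time_avg_real = sum(
    field(i * period / num_samples).real ** 2 for i in range(num_samples)
) / num_samples

# instantaneous value from the complex field at an arbitrary time
instantaneous_complex = abs(field(0.123)) ** 2 / 2

print(time_avg_real, instantaneous_complex)
```

Both numbers agree with $|E_0|^2/2$, whereas the squared real field alone oscillates between 0 and $|E_0|^2$.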

### Why are the fields not being absorbed by the PML?

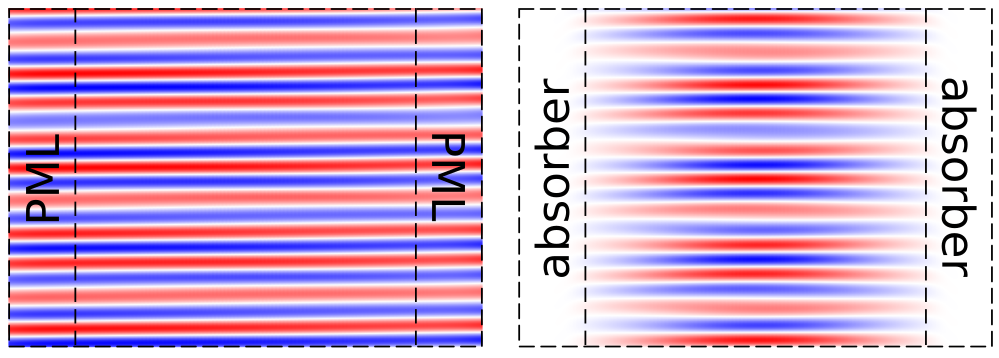

The decay coefficient of the fields within any PML contains a $\cos(\theta)$ factor where $\theta$ is the incidence angle ($\theta=0^{\circ}$ is normal incidence). The decay therefore becomes *slower* as glancing incidence ($\theta=90^{\circ}$) is approached. This is true in the continuum limit and can be demonstrated by verifying that the reflections from the PML do *not* change with resolution. Otherwise, if the reflection decreases with increasing resolution then it may be due to transition reflections (i.e., the impedance mismatch at the PML interface) which can also be reduced by making the PML thicker instead of increasing the resolution. Transition reflections also increase as glancing incidence is approached, because at glancing incidence the phase-velocity mismatch between incident and reflected waves goes to zero as described in [Optics Express, Vol. 16, pp. 11376-92 (2008)](http://www.opticsinfobase.org/abstract.cfm?URI=oe-16-15-11376). Glancing-angle fields commonly arise in simulations where one direction is periodic and the other is terminated by a PML (such as in [diffraction gratings](Python_Tutorials/Mode_Decomposition.md#diffraction-spectrum-of-a-binary-grating)), in which case you can have spurious solutions that travel *parallel* to the PML interface; a workaround is to use a thicker non-PML [absorber](Python_User_Interface.md#absorber). This is demonstrated in the figure above by the glancing-angle fields remaining in the cell after a [source planewave](Python_Tutorials/Eigenmode_Source.md#planewaves-in-homogeneous-media) at 45° has long been turned off: the PML (left inset) is unable to absorb these fields unlike the absorber (right inset).

### How do I compute the modes of a non-orthogonal (i.e., triangular) lattice?

Meep does not support non-rectangular unit cells. To model a triangular lattice, you have to use a supercell. This will cause the band structure to be [folded](#why-are-there-strange-peaks-in-my-reflectancetransmittance-spectrum-when-modeling-planar-or-periodic-structures). However, if you take your point source and replicate it according to the underlying triangular lattice vectors, with the right phase relationship according to the Bloch wavevector, then it should excite the folded bands only with very low amplitude as reported by [Harminv](Python_User_Interface.md#harminv). Also, for every Harminv point you put in, you should analyze the fields from the periodic copies of that point (with the periodicity of the underlying lattice). Then, reject any frequency that is not detected at *all* points, with an amplitude that is related by something close to the correct $\exp(i\vec{k}\cdot\vec{r})$ phase.

In principle, the excitation of the folded bands would be exactly zero if you place your sources correctly in the supercell. However, this doesn't happen in FDTD because the finite grid spoils the symmetry slightly. It also means that the detection of folded bands will vary with resolution. For an example, see Section 4.6 ("Sources in Supercells") in [Chapter 4](http://arxiv.org/abs/arXiv:1301.5366) ("Electromagnetic Wave Source Conditions") of [Advances in FDTD Computational Electrodynamics: Photonics and Nanotechnology](https://www.amazon.com/Advances-FDTD-Computational-Electrodynamics-Nanotechnology/dp/1608071707).

For structures with a lossless (i.e., purely real) permittivity, you can also compute the dispersion relation using [MPB](https://mpb.readthedocs.io/en/latest/), which does support a non-orthogonal lattice.

### For calculations involving Fourier-transformed fields, why should the source be a pulse rather than a continuous wave?

A continuous-wave source ([ContinuousSource](Python_User_Interface.md#continuoussource)) produces fields which are not integrable: their Fourier transform will not converge as the run time of the simulation is increased because the source never terminates. The Fourier-transformed fields are therefore arbitrarily defined by the run time. This [windowing](https://en.wikipedia.org/wiki/Window_function) does different things to the normalization and scattering runs because the spectra are different in the two cases. In contrast, a pulsed source ([GaussianSource](Python_User_Interface.md#gaussiansource)) produces fields which are [L2](https://en.wikipedia.org/wiki/Norm_(mathematics)#Euclidean_norm)-integrable: their Fourier transform is well defined and convergent as long as the run time is sufficiently large and the [fields have decayed away](#checking-convergence). Note that for a CW source the amplitude of the Fourier transform grows linearly with the run time; the Poynting flux, which is proportional to the amplitude squared, grows quadratically.
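The lack of convergence for a CW signal can be seen with a simple discrete sum. The sketch below accumulates the Fourier transform of $\cos(2\pi f t)$ at the frequency $f$ itself over two different run times; the function name `cw_dft` and the values of `freq`, `run_time`, and `dt` are arbitrary choices for illustration and do not reproduce Meep's DFT monitors.

```python
import cmath
import math


def cw_dft(freq, run_time, dt=0.01):
    """Accumulate sum_n cos(2*pi*f*t_n) * exp(2j*pi*f*t_n) * dt up to run_time."""
    steps = int(round(run_time / dt))
    total = 0j
    for n in range(steps):
        t = n * dt
        total += math.cos(2 * math.pi * freq * t) * cmath.exp(2j * math.pi * freq * t) * dt
    return total


amp_short = abs(cw_dft(freq=1.0, run_time=100.0))
amp_long = abs(cw_dft(freq=1.0, run_time=200.0))

print(amp_long / amp_short)         # amplitude ratio
print((amp_long / amp_short) ** 2)  # ratio of amplitude squared
```

Doubling the run time doubles the accumulated amplitude and quadruples its square, so the result depends on when the simulation happens to stop.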

When computing the reflectance/transmittance for linear materials, you should get the same results if you put in a narrow- or broad-band Gaussian and look at only one frequency component of the Fourier transform. The latter has the advantage that it requires a shorter simulation for the fields to decay away. Moreover, if you want the scattering properties as a function of both frequency and angle (of an incident planewave), then the short-time pulses have a further advantage: each simulation with a short pulse and fixed `k_point` yields a broad spectrum result, each frequency of which corresponds to a different angle. Then you repeat the simulation for a range of `k_point`s, and at the end you'll have a 2d dataset of reflectance/transmittance vs. both frequency and angle. For an example, see [Tutorial/Basics/Angular Reflectance Spectrum of a Planar Interface](Python_Tutorials/Basics.md#angular-reflectance-spectrum-of-a-planar-interface).
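The mapping from frequency to angle at a fixed `k_point` can be sketched as follows. This assumes a planewave incident from a homogeneous medium of index `n`, with the transverse wavevector component `kx` and the frequency related by `kx = n * freq * sin(theta)` in Meep units; the function name and sample values are hypothetical.

```python
import math


def incidence_angle(kx, freq, n=1.0):
    """Angle in degrees satisfying kx = n * freq * sin(theta), or None if evanescent."""
    s = kx / (n * freq)
    if abs(s) > 1.0:
        return None  # no propagating planewave at this frequency
    return math.degrees(math.asin(s))


kx = 0.5  # fixed transverse component of the k_point (illustrative)
for freq in (0.4, 0.6, 1.0, 2.0):
    print(freq, incidence_angle(kx, freq))
```

For a single `kx`, higher frequencies correspond to angles closer to normal incidence, which is why one broadband run covers a range of angles.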

Note: for broadband calculations involving Fourier-transformed fields or [Harminv](Python_User_Interface.md#harminv), the frequency bandwidth of the Gaussian source and the fields monitor should typically be similar. If the source bandwidth is too narrow, then the normalization procedure for something like a [transmittance/reflectance spectrum](Introduction.md#transmittancereflectance-spectra) (in which you divide by the incident-wave spectrum) will involve dividing by values close to zero, which amplifies computational errors due to floating-point roundoff or other effects.

### How does `k_point` define the phase relation between adjacent unit cells?

If you set the `k_point` to any `meep.Vector3`, the structure will be periodic in **all** directions. There is a [`set_boundary`](Python_User_Interface.md#field-computations) routine that allows you to set individual boundary conditions independently, however.

A periodic structure does **not** imply periodic fields. The value of the `k_point` determines the *phase relation* between the fields and sources in adjacent periodic unit cells. In general, if you have period (`Lx`,`Ly`) and you are looking at the (`n`,`m`) unit cell it has a phase of exp(2πi * (`kx` * `Lx` * `n` + `ky` * `Ly` * `m`)). For example, if you set the `k_point` to `meep.Vector3(0,0,0)`, that means the fields/sources are periodic: the phase is unity from one cell to the next. If you set the `k_point` to `meep.Vector3(1,0,0)` it means that there is a phase difference of exp(2πi * `Lx`) between adjacent cells in the *x* direction. This is known as a [Bloch wave](https://en.wikipedia.org/wiki/Bloch_wave).
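The phase factor above is easy to evaluate directly. The following sketch is a plain transcription of exp(2πi * (`kx` * `Lx` * `n` + `ky` * `Ly` * `m`)); the function name and the sample arguments are illustrative.

```python
import cmath
import math


def bloch_phase(kx, ky, Lx, Ly, n, m):
    """Phase of the (n, m) unit cell relative to the (0, 0) cell."""
    return cmath.exp(2j * math.pi * (kx * Lx * n + ky * Ly * m))


# zero k_point: the fields are periodic, so every cell has unit phase
print(bloch_phase(0, 0, 1.0, 1.0, n=3, m=2))

# kx = 0.5 with Lx = 1: adjacent cells in x differ by a factor of -1
print(bloch_phase(0.5, 0, 1.0, 1.0, n=1, m=0))
```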

A non-zero `k_point` introduces a set of "ghost" pixels along *one* side of each direction of the cell. These additional pixels are used to store the complex Bloch phase multiplied by the field value from the opposite side of the cell boundary. As a result, the size of the cell increases by one pixel in each direction relative to the case of no `k_point`.

Note: in any cell direction where there is a [PML](Perfectly_Matched_Layer.md), the boundary conditions are mostly irrelevant. For example, if there is a PML in front of a periodic boundary, the periodicity doesn't matter because the field will have decayed almost to zero by the time it "wraps around" to the other side of the cell.

### How do I compute the integral of the energy density over a given region?

For the instantaneous fields, you can use [`electric_energy_in_box`](Python_User_Interface.md#field-computations) to compute the integral of $\varepsilon|\mathbf{E}|^2/2$ in some region. For the magnetic or total field energy, you can use `magnetic_energy_in_box` or `field_energy_in_box`. When computing the total field energy, you will need to first [synchronize the magnetic and electric fields](Synchronizing_the_Magnetic_and_Electric_Fields.md). To compute the integral of the energy density for a *single* field component e.g. $\varepsilon|E_z|^2/2$, you can use the [field function](Field_Functions.md): `integrate_field_function([meep.Dielectric, meep.Ez], lambda eps, ez: 0.5*eps*abs(ez)**2, where=meep.Volume(...))`.

For the Fourier-transformed fields, you can use [`add_energy`](Python_User_Interface.md#energy-density-spectra) to compute the energy density over a region and sum the list of values (at a fixed frequency) returned by `get_electric_energy`/`get_magnetic_energy`/`get_total_energy` multiplied by the volume of the grid voxel to obtain the integral.
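The final summation step can be sketched independently of Meep. Here `values` stands for the list of energy-density values at one frequency, and the voxel volume is taken to be `(1/resolution)**dims` for a uniform grid; the function name and the sample data are hypothetical.

```python
def integrate_energy_density(values, resolution, dims):
    """Sum energy-density samples and multiply by the voxel volume."""
    voxel_volume = (1.0 / resolution) ** dims
    return sum(values) * voxel_volume


# hypothetical data: a uniform density of 1.0 over a 1x1 region in 2d
resolution = 20
values = [1.0] * (resolution * resolution)
total = integrate_energy_density(values, resolution, dims=2)
print(total)
```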

### How do I compute the energy density for a dispersive material?

The energy density computed by Meep is $\frac{1}{2}(\vec{E}\cdot\vec{D}+\vec{H}\cdot\vec{B})$ via [`add_energy`](Python_User_Interface.md#energy-density-spectra) (Fourier-transformed fields) or [`electric_energy_in_box`](Python_User_Interface.md#field-computations)/`magnetic_energy_in_box`/`field_energy_in_box` (instantaneous fields). This is *not* the energy density for a dispersive medium. With dispersion and negligible absorption, the energy density is described by a "Brillouin" formula that includes an additional $\frac{d\varepsilon}{d\omega}$ term (and $\frac{d\mu}{d\omega}$ in magnetic media) as described in [Classical Electrodynamics](https://www.amazon.com/Classical-Electrodynamics-Third-David-Jackson/dp/047130932X) by J.D. Jackson as well as other standard textbooks. More generally, one can define a "dynamical energy density" that captures the energy in the fields and the work done on the polarization currents; as reviewed in [Physical Review A, 90, 023847 (2014)](https://journals.aps.org/pra/abstract/10.1103/PhysRevA.90.023847) ([pdf](http://math.mit.edu/~stevenj/papers/Welters14.pdf)), the dynamical energy density reduces to the Brillouin formula for negligible absorption, and is constructed so as to enforce [Poynting's theorem](https://en.wikipedia.org/wiki/Poynting%27s_theorem) for conservation of energy.

Although Meep does not currently implement a function to compute the dynamical energy density (or the limiting case of the Brillouin formula) explicitly, since this density is expressed in terms of time derivatives it could in principle be implemented by processing the fields during time-stepping. However, if you only want the total *energy* in some box, you can instead compute it via Poynting's theorem from the [total energy flux flowing into that box](#how-do-i-compute-the-absorbed-power-in-a-local-subregion-of-the-cell), and this is equivalent to integrating the dynamical energy density or its special case of the Brillouin formula.

### How do I output the angular fields in cylindrical coordinates?

Meep can only output sections of the $rz$ plane in cylindrical coordinates. To obtain the angular fields, you will need to do the conversion manually using the fields in the $rz$ plane: the fields at all other φ are related by a factor of exp(imφ) due to the continuous rotational symmetry.
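The manual conversion is a multiplication by exp(imφ). The sketch below applies it to a single complex field value from the $rz$ plane; the function name and sample values are illustrative, and in practice the same factor would multiply every point of the $rz$ output.

```python
import cmath
import math


def field_at_phi(f_rz, m, phi):
    """Field at angle phi from its value in the rz plane (phi = 0)."""
    return f_rz * cmath.exp(1j * m * phi)


f_rz = 0.7 + 0.2j  # hypothetical complex field value at some (r, z)
m = 1              # angular dependence exp(i*m*phi)

for phi in (0.0, math.pi / 2, math.pi):
    print(phi, field_at_phi(f_rz, m, phi))
```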

### How does the use of symmetry affect nonlinear media?

In linear media, if you use an odd-symmetry source, the even-symmetry modes cannot be excited. If you then specify odd [symmetry](Python_User_Interface.md#symmetry) in your simulation, nothing changes. The even-symmetry modes are not disregarded, they are simply not relevant. Vice-versa for an even-symmetry source.

In nonlinear media involving e.g. $\chi^{(2)}$, it is possible for an odd-symmetry source to excite an even-symmetry mode (because the square of an odd function is an even function). In this case, imposing odd symmetry in your simulation will affect the results rather than just improve its performance.

Usage: Materials
----------------

### Is there a materials library?

Yes. A [materials library](https://github.com/NanoComp/meep/blob/master/python/materials.py) is available containing [crystalline silicon](https://en.wikipedia.org/wiki/Crystalline_silicon) (c-Si), [amorphous silicon](https://en.wikipedia.org/wiki/Amorphous_silicon) (a-Si) including the hydrogenated form, [silicon dioxide](https://en.wikipedia.org/wiki/Silicon_dioxide) (SiO<sub>2</sub>), [indium tin oxide](https://en.wikipedia.org/wiki/Indium_tin_oxide) (ITO), [alumina](https://en.wikipedia.org/wiki/Aluminium_oxide) (Al<sub>2</sub>O<sub>3</sub>), [gallium arsenide](https://en.wikipedia.org/wiki/Gallium_arsenide) (GaAs), [gallium nitride](https://en.wikipedia.org/wiki/Gallium_nitride) (GaN), [aluminum arsenide](https://en.wikipedia.org/wiki/Aluminium_arsenide) (AlAs), [aluminum nitride](https://en.wikipedia.org/wiki/Aluminium_nitride) (AlN), [borosilicate glass](https://en.wikipedia.org/wiki/Borosilicate_glass) (BK7), [fused quartz](https://en.wikipedia.org/wiki/Fused_quartz), [silicon nitride](https://en.wikipedia.org/wiki/Silicon_nitride) (Si<sub>3</sub>N<sub>4</sub>), [germanium](https://en.wikipedia.org/wiki/Germanium) (Ge), [indium phosphide](https://en.wikipedia.org/wiki/Indium_phosphide) (InP), [lithium niobate](https://en.wikipedia.org/wiki/Lithium_niobate) (LiNbO<sub>3</sub>), as well as 11 elemental metals: [silver](https://en.wikipedia.org/wiki/Silver) (Ag), [gold](https://en.wikipedia.org/wiki/Gold) (Au), [copper](https://en.wikipedia.org/wiki/Copper) (Cu), [aluminum](https://en.wikipedia.org/wiki/Aluminium) (Al), [beryllium](https://en.wikipedia.org/wiki/Beryllium) (Be), [chromium](https://en.wikipedia.org/wiki/Chromium) (Cr), [nickel](https://en.wikipedia.org/wiki/Nickel) (Ni), [palladium](https://en.wikipedia.org/wiki/Palladium) (Pd), [platinum](https://en.wikipedia.org/wiki/Platinum) (Pt), [titanium](https://en.wikipedia.org/wiki/Titanium) (Ti), and [tungsten](https://en.wikipedia.org/wiki/Tungsten) (W).
Additional information is provided in [Materials](Materials.md#materials-library).

### Does Meep support gyrotropic materials?

Yes. Meep supports [gyrotropic media](Materials.md#gyrotropic-media) which, due to an [external magnetic field](https://en.wikipedia.org/wiki/Magneto-optic_effect), involve a tensor ε (or μ) with imaginary off-diagonal components and no absorption.

### When outputting the permittivity function to a file, I don't see any tensors

Only the [harmonic mean](https://en.wikipedia.org/wiki/Harmonic_mean) of the eigenvalues of the $\varepsilon$/$\mu$ tensor is written to an HDF5 file using `output_epsilon`/`output_mu`. The complex $\varepsilon$ and $\mu$ tensors can be obtained at the frequency `f` as a 3x3 Numpy array via the functions `epsilon(f)` and `mu(f)` of the [`Medium`](Python_User_Interface.md#medium) class.
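To give a feel for what a harmonic mean of eigenvalues looks like, here is a small sketch restricted to a diagonal tensor, for which the eigenvalues are simply the diagonal entries. The tensor values are hypothetical and the calculation is only an illustration of the formula, not a reproduction of Meep's output routine.

```python
from statistics import harmonic_mean

# hypothetical diagonal permittivity tensor: eigenvalues are the diagonal entries
eps_diag = [2.0, 4.0, 4.0]

# harmonic mean: 3 / (1/2 + 1/4 + 1/4)
eps_scalar = harmonic_mean(eps_diag)
print(eps_scalar)
```

For an isotropic material all three eigenvalues coincide and the harmonic mean equals that common value.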

### How do I model graphene or other 2d materials with single-atom thickness?

Typically, graphene and similar "2d" materials are mathematically represented as a [delta function](https://en.wikipedia.org/wiki/Dirac_delta_function) conductivity in Maxwell's equations because their thickness is negligible compared to the wavelength, where the conductivity is furthermore usually anisotropic (producing surface-parallel currents in response to the surface-parallel components of the electric field). In a discretized computer model like Meep, this is approximated by an anisotropic volume conductivity (or other polarizable dispersive material) whose thickness is proportional to (`1/resolution`) *and* whose amplitude is proportional to `resolution`. For example, this could be represented by a one-pixel-thick [conductor](Materials.md#conductivity-and-complex), e.g. a [`Block`](Python_User_Interface.md#block) with `size=meep.Vector3(x,y,1/resolution)` in a 3d cell, with the value of the conductivity explicitly multiplied by `resolution`.
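The scaling described above can be checked with simple arithmetic: the layer gets thinner as `resolution` grows while the volume conductivity grows by the same factor, so their product stays fixed. In this sketch `sigma_sheet` is a hypothetical surface conductivity in arbitrary units and the function name is an assumption.

```python
def sheet_to_volume(sigma_sheet, resolution):
    """Return (thickness, volume conductivity) for a one-pixel-thick layer."""
    thickness = 1.0 / resolution
    sigma_volume = sigma_sheet * resolution
    return thickness, sigma_volume


sigma_sheet = 0.02  # hypothetical surface conductivity (arbitrary units)
for resolution in (10, 50, 200):
    thickness, sigma_volume = sheet_to_volume(sigma_sheet, resolution)
    # the product approximates the delta-function weight and does not change
    print(resolution, thickness, sigma_volume, thickness * sigma_volume)
```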

### How do I model a continuously varying permittivity?

You can use a [material function](Python_User_Interface.md#medium) to model any arbitrary, position-dependent permittivity/permeability function $\varepsilon(\vec{r})$/$\mu(\vec{r})$ including anisotropic, [dispersive](Materials.md#material-dispersion), and [nonlinear](Materials.md#nonlinearity) media. For an example involving a non-dispersive, anisotropic material, see [Tutorial/Mode Decomposition/Diffraction Spectrum of Liquid-Crystal Polarization Gratings](Python_Tutorials/Mode_Decomposition.md#diffraction-spectrum-of-liquid-crystal-polarization-gratings). The material function construct can also be used to specify arbitrary *shapes* (e.g., curves such as parabolas, sinusoids, etc.) within: (1) the interior boundary of a [`GeometricObject`](Python_User_Interface.md#geometricobject) (e.g., `Block`, `Sphere`, `Cylinder`, etc.), (2) the entire cell via the [`material_function`](Python_User_Interface.md#material-function) parameter of the `Simulation` constructor, or (3) a combination of the two.

Usage: Structures
-----------------

### What are the different ways to define a structure?

There are six ways to define a structure: (1) the [`GeometricObject`](Python_User_Interface.md#geometricobject) (Python) or [`geometric-object`](Scheme_User_Interface.md#geometric-object) (Scheme) class used to specify a collection of predefined shapes including `Prism`, `Sphere`, `Cylinder`, `Cone`, `Block`, and `Ellipsoid`, (2) [`material_function`](Python_User_Interface.md#material-function) (Python) or [`material-function`](Scheme_User_Interface.md#material-function) (Scheme) used to define an arbitrary function: for a given position in the cell, return the $\varepsilon$/$\mu$ at that point, (3) import the scalar, real-valued, frequency-independent permittivity from an HDF5 file (which can be created using e.g., [h5py](http://docs.h5py.org/en/stable/)) via the `epsilon_input_file` (Python) or `epsilon-input-file` (Scheme) input parameter, (4) import planar geometries from a [GDSII file](Python_User_Interface.md#gdsii-support), (5) load the raw $\varepsilon$/$\mu$ saved from a previous simulation using [`load_structure`](Python_User_Interface.md#load-and-dump-structure) (Python) or [`meep-structure-load`](Scheme_User_Interface.md#load-and-dump-structure) (Scheme), or (6) a [`MaterialGrid`](Python_User_Interface.md#materialgrid) used to specify a pixel grid. Combinations of (1), (2), (4), and (6) are allowed but not (3) or (5).

### Does Meep support importing GDSII files?

Yes. The [`get_GDSII_prisms`](Python_User_Interface.md#gdsii-support) routine is used to import [GDSII](https://en.wikipedia.org/wiki/GDSII) files. See [Tutorial/GDSII Import](Python_Tutorials/GDSII_Import.md) for examples. This feature facilitates the simulation of 2d/planar structures which are fabricated using semiconductor foundries. Also, it enables Meep's plug-and-play capability with [electronic design automation](https://en.wikipedia.org/wiki/Electronic_design_automation) (EDA) circuit-layout editors (e.g., Cadence Virtuoso Layout, Silvaco Expert, KLayout, etc.). EDA is used for the synthesis and verification of large and complex integrated circuits. A useful tool for creating GDS files of simple geometries (e.g., curved waveguides, ring resonators, directional couplers, etc.) is [gdspy](https://gdspy.readthedocs.io/en/stable/).

### Can Meep simulate time-varying structures?

Yes. The most general method is to re-initialize the material at every timestep by calling `field::set_materials` or `set_materials_from_geometry` in C++, or `simulation.set_materials` in Python. However, this is potentially quite slow. One alternative is a function [`field::phase_in_material`](Python_User_Interface.md#field-computations) that allows you to linearly interpolate between two precomputed structures, gradually transitioning over a given time period; a more general version of this functionality may be enabled in the future (Issue [#207](https://github.com/NanoComp/meep/issues/207)).
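
To make the idea of linearly interpolating between two precomputed structures concrete, here is a minimal pure-Python sketch. It does not call Meep and is not how `phase_in_material` is implemented; the function name `phased_epsilon` and its parameters are hypothetical and only illustrate the per-pixel blend.

```python
def phased_epsilon(eps_start, eps_end, t, phasing_time):
    """Linearly interpolate between two precomputed permittivity grids.

    eps_start, eps_end: equal-length lists of per-pixel permittivity values.
    t: current time; phasing_time: duration of the transition.
    (All names here are hypothetical, chosen for illustration only.)
    """
    if len(eps_start) != len(eps_end):
        raise ValueError("both structures must be sampled on the same grid")
    # Fraction of the transition completed so far, clamped to [0, 1].
    s = min(max(t / phasing_time, 0.0), 1.0)
    return [(1.0 - s) * a + s * b for a, b in zip(eps_start, eps_end)]


eps_a = [1.0, 1.0, 12.0, 12.0]  # structure before the transition
eps_b = [1.0, 12.0, 12.0, 1.0]  # structure after the transition

halfway = phased_epsilon(eps_a, eps_b, t=5.0, phasing_time=10.0)
finished = phased_epsilon(eps_a, eps_b, t=20.0, phasing_time=10.0)
```

Halfway through the transition, the pixels that differ between the two structures hold the midpoint value; once `t` exceeds `phasing_time` the result equals the second structure.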

Usage: Subpixel Averaging
-------------------------

### Why doesn't turning off subpixel averaging work?

By default, when Meep assigns a dielectric constant $\varepsilon$ or $\mu$ to each pixel, it uses a carefully designed average of the $\varepsilon$ values within that pixel. This subpixel averaging generally improves the accuracy of the simulation — perhaps counter-intuitively, for geometries with discontinuous $\varepsilon$ it is *more* accurate (i.e. closer to the exact Maxwell result for the *discontinuous* case) to do the simulation with the subpixel-averaged (*smoothed*) $\varepsilon$, as long as the averaging is done properly. For details, see Section 3 ("Interpolation and the illusion of continuity") of [Computer Physics Communications, Vol. 181, pp. 687-702 (2010)](http://ab-initio.mit.edu/~oskooi/papers/Oskooi10.pdf).

Still, there are times when you might not want this feature: for example, if your accuracy is limited by other issues, or if you want to skip the wait at the beginning of the simulation while Meep does the averaging. In this case, you can disable the subpixel averaging by setting `Simulation.eps_averaging = False` (Python) or `(set! eps-averaging? false)` (Scheme). For more details, see [Python Interface](Python_User_Interface.md). Note: even if subpixel averaging is disabled, the time required for the grid initialization may still be nontrivial (e.g., minutes for a 3d cell with large `resolution`), because Meep still needs to evaluate $\varepsilon$ three times for every voxel due to the Yee grid.

Even if you disable the subpixel averaging, however, when you output the dielectric function to a file and visualize it, you may notice that there are some pixels with intermediate ε values, right at the boundary between two materials. This is due to a completely different reason. Internally, Meep's simulation is performed on a [Yee grid](Yee_Lattice.md), in which every field component is stored on a slightly different grid, offset from the others by half a pixel, and the ε values are also stored on this Yee grid. For output purposes, however, it is more user-friendly to output all fields and other quantities on the same grid at the center of each pixel, so all quantities are interpolated onto this grid for output. Therefore, even though the internal $\varepsilon$ values are indeed discontinuous when you disable subpixel averaging, the output file will still contain some "averaged" values at interfaces due to the interpolation from the Yee grid to the center-pixel grid. For the same reason, if `k_point` is set and the boundaries are Bloch-periodic, the permittivity function of the entire cell obtained via `get_epsilon` or `output_epsilon` will show that a little of the cell from one edge "leaks" over to the other edge: these extra pixels are added to implement the boundary conditions. This is independent of PML and the way the structure is defined (i.e., using geometric objects or a material function, etc.). An example is shown in the figure below comparing `output_epsilon` for two cases: with and without `k_point`. The discretization artifacts are highlighted.
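
The interpolation effect described above can be reproduced with a 1d toy model. The sketch below assumes, purely for illustration, that ε is stored at pixel edges and that the output value at each pixel center is the average of its two neighbors; Meep's actual interpolation depends on the field component and the dimensionality, and the helper name `interpolate_to_centers` is hypothetical.

```python
def interpolate_to_centers(values_on_edges):
    """Average neighboring edge samples to obtain values at pixel centers (1d)."""
    return [0.5 * (a + b) for a, b in zip(values_on_edges, values_on_edges[1:])]


# A sharp interface between eps = 1 and eps = 12, with no subpixel averaging.
eps_edges = [1.0, 1.0, 1.0, 12.0, 12.0, 12.0]

eps_centers = interpolate_to_centers(eps_edges)
# The stored values take only the two material values, but the interpolated
# output contains one intermediate value at the interface.
intermediate = [e for e in eps_centers if e not in (1.0, 12.0)]
```

Even though every stored value is either 1 or 12, the interpolated output contains a pixel with ε = 6.5 right at the interface.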

### Why does subpixel averaging take so long?

There are at least two possible reasons: you are using (1) a [material function](Python_User_Interface.md#material-function) to define a [`Medium`](Python_User_Interface.md#medium) object or (2) the [C++](C++_Tutorial) interface. The [Python](Python_User_Interface/) and [Scheme](Scheme_User_Interface/) interfaces analytically compute the averaged permittivity for boundary voxels involving at most one [`GeometricObject`](Python_User_Interface.md#geometricobject) (e.g., `Sphere`, `Prism`, `Block`, etc.). In contrast, the C++ interface computes these averages from the material function using [numerical quadrature](https://en.wikipedia.org/wiki/Numerical_integration) if the parameter `use_anisotropic_averaging=true` is passed to the constructor of `set_materials`. This procedure involves calling the material function many times for every voxel in the [structure object](C++_Developer_Information.md#data-structures-and-chunks), which can be slow due to the [SWIG](http://www.swig.org/) callbacks, particularly because the voxel density is repeatedly doubled until a given threshold tolerance (`subpixel_tol`) or maximum iteration number (`subpixel_maxeval`) is reached. Because of this discrepancy in the subpixel averaging, the results for the C++ and Python/Scheme interfaces may be slightly different at the same resolution. You can potentially speed up subpixel averaging by increasing `subpixel_tol` or decreasing `subpixel_maxeval`. **Note:** the slow callbacks may still be noticeable during the grid initialization *even when subpixel averaging is turned off*. Keep in mind that if you turn off subpixel averaging, you will usually need to increase the grid resolution to obtain the same accuracy, so you will have to determine how much accuracy you want to trade for time.
Alternatively, in the C++ interface you can use the [`meepgeom.hpp`](https://github.com/NanoComp/meep/blob/master/src/meepgeom.hpp) routines to define your geometry in terms of blocks, cylinders, and so on, similar to Python and Scheme, with semi-analytical subpixel averaging.
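
To see why the tolerance and the evaluation cap control the cost, consider the following toy version of "double the sample density until converged". It is a 1d midpoint rule written for illustration and is not Meep's integrator; the function `averaged_epsilon` and its `tol` and `maxeval` arguments are hypothetical stand-ins that play the roles of `subpixel_tol` and `subpixel_maxeval`.

```python
def averaged_epsilon(eps_func, x_lo, x_hi, tol=1e-4, maxeval=100000):
    """Average eps_func over [x_lo, x_hi], doubling the sample count each pass.

    Returns (average, number_of_function_calls). Stops when two successive
    estimates agree to a relative tolerance tol, or when the next pass would
    push the total number of calls beyond maxeval.
    """
    n = 1
    calls = 0
    previous = None
    while True:
        h = (x_hi - x_lo) / n
        total = 0.0
        for i in range(n):
            total += eps_func(x_lo + (i + 0.5) * h)  # midpoint of sub-interval i
        calls += n
        estimate = total / n
        if previous is not None and abs(estimate - previous) <= tol * abs(estimate):
            return estimate, calls
        if calls + 2 * n > maxeval:
            return estimate, calls
        previous = estimate
        n *= 2


def step(x):
    # Discontinuous permittivity: 12 for x < 1/3, otherwise 1.
    return 12.0 if x < 1.0 / 3.0 else 1.0


loose, loose_calls = averaged_epsilon(step, 0.0, 1.0, tol=1e-2)
tight, tight_calls = averaged_epsilon(step, 0.0, 1.0, tol=1e-3)
capped, capped_calls = averaged_epsilon(step, 0.0, 1.0, tol=1e-9, maxeval=100)
```

In this toy problem, tightening the tolerance by a factor of 10 increases the number of material-function calls for a single pixel from 511 to 8191, which is why a looser tolerance or a smaller evaluation cap can shorten the initialization considerably.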

### Why are there artifacts in the permittivity grid when two geometric objects are touching?

Subpixel averaging affects pixels that contain **at most one** object interface. If a boundary pixel contains two object interfaces, Meep does not attempt subpixel averaging because the analytical calculations for the material filling fraction are too messy to compute and brute-force numerical integration is too slow. Instead, Meep just uses the ε at the grid point. Sometimes if a grid point falls exactly on the boundary there are roundoff effects on which (if any) object the point lies within; you can eliminate some such artifacts by slightly padding the object sizes (e.g. by `1e-8`) or by specifying your geometry in some other way that doesn't involve exactly coincident boundaries.
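
The roundoff effect and the padding workaround can be illustrated without Meep. In the sketch below, the helper `inside_block` is a hypothetical 1d containment test, not a Meep routine; it shows how a grid point that should lie exactly on a block boundary ends up outside because of floating-point roundoff, and how padding by `1e-8` resolves this.

```python
def inside_block(x, center, size):
    """Return True if the 1d point x lies within a block of given center and size."""
    return abs(x - center) <= 0.5 * size


dx = 0.1
grid_point = 3 * dx   # intended to be 0.3, but evaluates to 0.30000000000000004
block_size = 0.6      # block intended to span [-0.3, 0.3]

exact = inside_block(grid_point, 0.0, block_size)           # roundoff decides
padded = inside_block(grid_point, 0.0, block_size + 1e-8)   # padding removes the ambiguity
```

Here `exact` is `False` even though the point was meant to sit on the boundary, whereas `padded` is `True`.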

### Can subpixel averaging be applied to a user-defined material function?

Yes, but it tends to be slow. Subpixel averaging is performed by default (`eps_averaging=True`) for [`GeometricObject`](Python_User_Interface.md#geometricobject)s (e.g. `Cylinder`, `Block`, `Prism`, etc.) where the material filling fraction and normal vector of boundary pixels, which are used to form the [effective permittivity](Subpixel_Smoothing.md#smoothed-permittivity-tensor-via-perturbation-theory), can be computed analytically using a [level-set function](https://en.wikipedia.org/wiki/Level-set_method). This procedure typically takes a few seconds for a 3d cell. Computing these quantities for a user-defined material function using adaptive numerical integration can be *very* slow (minutes to hours) and also less accurate than the analytic approach. As a result, for simulations involving a discontinuous `material_function` it is often preferable to leave subpixel averaging of the material function disabled (the default) and to increase the `resolution` for accurate results. For an example, see [Subpixel Smoothing/Enabling Averaging for Material Function](Subpixel_Smoothing.md#enabling-averaging-for-material-function).

Also, fast and accurate subpixel smoothing is available for the [`MaterialGrid`](Python_User_Interface.md#materialgrid) which is based on an analytic formulation.
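
As a rough illustration of how a filling fraction and an interface normal combine into an effective permittivity, the sketch below treats the simplest case: a pixel cut by a flat interface between two isotropic materials. It uses the standard result that the component normal to the interface takes the harmonic mean of ε and the parallel components take the arithmetic mean. The function name `effective_permittivity` is hypothetical, and this is not Meep's implementation.

```python
def effective_permittivity(eps1, eps2, fill_fraction):
    """Return (eps_normal, eps_parallel) for a pixel cut by a flat interface.

    fill_fraction is the fraction of the pixel occupied by material eps1.
    """
    f = fill_fraction
    mean_eps = f * eps1 + (1.0 - f) * eps2       # arithmetic mean <eps>
    mean_inv_eps = f / eps1 + (1.0 - f) / eps2   # mean of the inverse <1/eps>
    eps_normal = 1.0 / mean_inv_eps              # field normal to the interface
    eps_parallel = mean_eps                      # field parallel to the interface
    return eps_normal, eps_parallel


# A pixel half filled with eps = 12 and half with eps = 1.
eps_n, eps_p = effective_permittivity(12.0, 1.0, 0.5)
```

For the half-filled pixel, the parallel component is 6.5 while the normal component is 24/13 (about 1.85), so the smoothed pixel is anisotropic even though both materials are isotropic.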

Usage: Performance
------------------

### Checking convergence

In any computer simulation like Meep, you should check that your results are *converged* with respect to any approximation that was made. There is no simple formula that will tell you in advance exactly how much resolution (etc.) is required for a given level of accuracy; the most reliable procedure is to simply double the resolution and verify that the answers you care about don't change to your desired tolerance. Useful things to check (ideally by doubling) in this way are: **resolution**, **run time** (for Fourier spectra), **PML thickness**.
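
The doubling procedure can be automated with a small driver. The sketch below is generic Python rather than Meep code: `converge_by_doubling` and `toy_simulation` are hypothetical names, and `toy_simulation` is a stand-in for a function that would run a real simulation at a given resolution and return the quantity of interest.

```python
def converge_by_doubling(compute, resolution, rel_tol=1e-2, max_doublings=6):
    """Double `resolution` until compute(resolution) changes by less than rel_tol.

    Returns (resolution, value) for the last evaluation, or raises RuntimeError
    if the result has not converged after max_doublings doublings.
    """
    previous = compute(resolution)
    for _ in range(max_doublings):
        resolution *= 2
        current = compute(resolution)
        if abs(current - previous) <= rel_tol * abs(current):
            return resolution, current
        previous = current
    raise RuntimeError("result did not converge; try more doublings")


def toy_simulation(resolution):
    # Stand-in for a real run: a result with a second-order discretization error.
    return 0.25 + 1.0 / resolution**2


res, value = converge_by_doubling(toy_simulation, resolution=10)
```

The same driver can be reused for the run time or the PML thickness by passing a function of that parameter instead of the resolution.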

Meep's [subpixel smoothing](Subpixel_Smoothing.md) often improves the rate of convergence and makes convergence a smoother function of resolution. However, unlike for the built-in geometric objects (e.g., `Sphere`, `Cylinder`, `Block`, etc.), subpixel smoothing does not occur for [dispersive materials](Subpixel_Smoothing.md#what-about-dispersive-materials) or [user-defined material functions](#why-does-subpixel-averaging-take-so-long) $\varepsilon(\vec{r})$.

For flux calculations involving pulsed (i.e., Gaussian) sources, it is important to run the simulation long enough to ensure that all the transient fields have sufficiently decayed away (e.g., due to absorption by the PMLs). If the simulation is terminated prematurely, the Fourier-transformed fields, which are accumulated during the time stepping (as explained in [Introduction](Introduction.md#transmittancereflectance-spectra)), will not be fully converged. Another side effect of not running the simulation long enough is that the Fourier spectra are smoothed out, causing sharp peaks to broaden into adjacent frequency bins (sometimes known as [spectral leakage](https://en.wikipedia.org/wiki/Spectral_leakage)) and hence limiting the spectral resolution (also known as the [Fourier uncertainty principle](https://en.wikipedia.org/wiki/Uncertainty_principle#Signal_processing)). In general, the minimum runtime should be the inverse of the desired frequency resolution. (Conversely, running for long after the time-domain fields have decayed away has a *negligible effect* on the Fourier-transformed fields: the [discrete-time Fourier transform](https://en.wikipedia.org/wiki/Discrete-time_Fourier_transform) is just a sum, and adding exponentially small values to a sum will not significantly change the result.) Convergence of the fields is typically achieved by lowering the `decay_by` parameter in the `stop_when_fields_decayed` [run function](Python_User_Interface.md#run-functions). Alternatively, you can explicitly set the run time to some numeric value that you repeatedly double, instead of using the field decay. Sometimes it is also informative to double the `cutoff` parameter of sources to increase their smoothness (reducing the amplitude of long-lived high-frequency modes).
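
Both points about run time can be checked numerically with a toy signal. The sketch below accumulates a discrete-time Fourier sum of a decaying oscillation in pure Python; it is not Meep's DFT accumulation, and the names `accumulated_dft`, `ringdown`, and `minimum_runtime`, as well as the signal parameters, are hypothetical choices for illustration.

```python
import cmath
import math


def accumulated_dft(signal, dt, frequency, num_steps):
    """Sum signal(n*dt) * exp(2j*pi*frequency*n*dt) * dt over num_steps time steps."""
    total = 0.0 + 0.0j
    for n in range(num_steps):
        t = n * dt
        total += signal(t) * cmath.exp(2j * math.pi * frequency * t) * dt
    return total


def ringdown(t, f0=0.15, tau=20.0):
    # Toy time-domain field: an oscillation at f0 decaying with lifetime tau.
    return math.exp(-t / tau) * math.cos(2 * math.pi * f0 * t)


def minimum_runtime(frequency_resolution):
    # Rule of thumb from the text: run time is at least 1 / (frequency resolution).
    return 1.0 / frequency_resolution


dt = 0.05
early = accumulated_dft(ringdown, dt, 0.15, num_steps=400)    # T = 20: fields still large
late = accumulated_dft(ringdown, dt, 0.15, num_steps=8000)    # T = 400: fields decayed
later = accumulated_dft(ringdown, dt, 0.15, num_steps=16000)  # T = 800: twice as long

change_if_stopped_early = abs(late - early)
change_if_run_longer = abs(later - late)
```

Stopping at T = 20 changes the accumulated value substantially, whereas doubling the run time from T = 400 to T = 800 changes it by a negligible amount because the added terms are exponentially small. By the rule of thumb, `minimum_runtime(0.01)` gives a run time of about 100 time units to resolve a frequency spacing of 0.01.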

### Should I expect linear [speedup](https://en.wikipedia.org/wiki/Speedup) from the parallel Meep?

For a given computational grid when `split_chunks_evenly=True` (the default), Meep divides the grid points roughly equally among the processors, and each process is responsible for all computations involving its "own" grid points (computing $\varepsilon$ from the materials, timestepping the fields, accumulating Fourier transforms, computing far fields, etcetera). How much speedup this parallelization translates into depends on a number of factors, especially:

* The ratio of communications to computation, and the speed of your network. During timestepping, each processor needs to communicate neighboring grid points with other processors, and if you have too few grid points per processor (or your network is too slow) then the cost of this communication could overwhelm the computational gains.

* [Load balancing](https://en.wikipedia.org/wiki/Load_balancing_(computing)): different portions of the grid may be more expensive than other portions, causing processors in the latter portions to sit idle while a few processors work on the expensive regions. For example, setting up the materials at the beginning is more expensive in regions with lots of objects or interfaces. Timestepping is more expensive in regions with Fourier-transformed flux planes. Computing far fields only uses the processors where the corresponding near fields are located.

* If you write lots of fields to files, the parallel I/O speed (which depends on your network, filesystem, etc) may dominate.
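
The first factor, the ratio of communication to computation, can be explored with a back-of-the-envelope model. The sketch below is a toy estimate and not a description of how Meep partitions or communicates: it assumes an n × n × n grid split into equal cubic chunks, work proportional to the chunk volume, and communication proportional to the chunk surface. The function name `estimated_speedup` and the relative cost `comm_cost` are hypothetical, and load balancing and I/O are ignored.

```python
def estimated_speedup(n, processes, comm_cost=5.0):
    """Toy speedup estimate for an n x n x n grid split into cubic chunks.

    comm_cost is an assumed cost of exchanging one boundary grid point,
    relative to the cost of updating one grid point.
    """
    if processes == 1:
        return 1.0
    serial_time = float(n**3)
    side = n / processes ** (1.0 / 3.0)     # edge length of each chunk
    compute_time = side**3                  # grid points updated per process
    comm_time = comm_cost * 6.0 * side**2   # six chunk faces exchanged per step
    return serial_time / (compute_time + comm_time)


large = estimated_speedup(400, 64)  # many grid points per process
small = estimated_speedup(40, 64)   # few grid points per process
```

With these assumed costs, 64 processes give a speedup of roughly 49 for the large grid but only about 16 for the small one, reflecting the point that too few grid points per processor lets communication dominate.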